Welcome to my blog, where I discuss mathematics, physics, deep learning, and machine learning. You will find articles and discussions about these topics, as well as projects I am actively working on. I hope you enjoy it, and please feel free to leave a reaction or a comment at the end of the posts. This helps me improve the content.


Uncertainty Estimation for Taxi Trip Duration Predictions in NYC

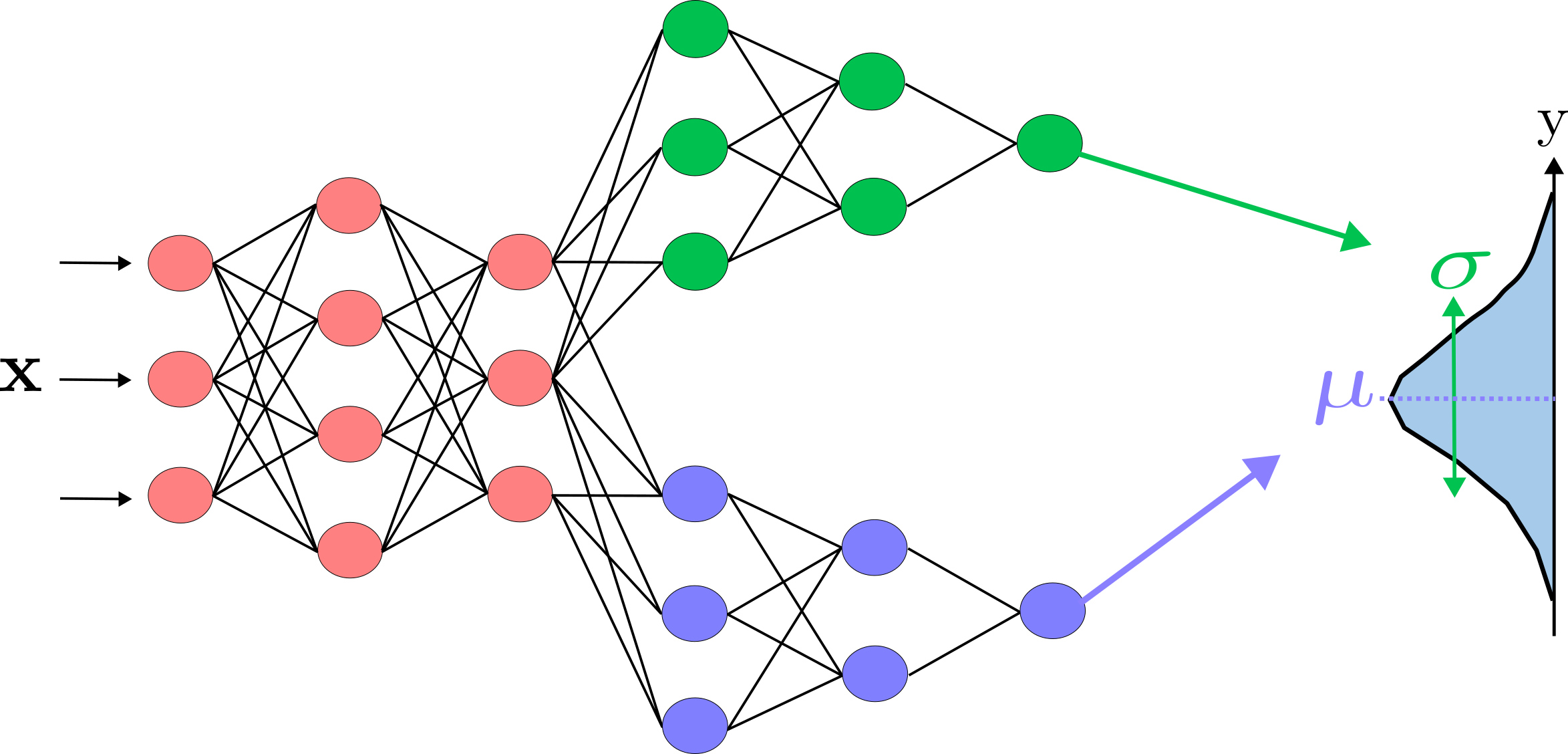

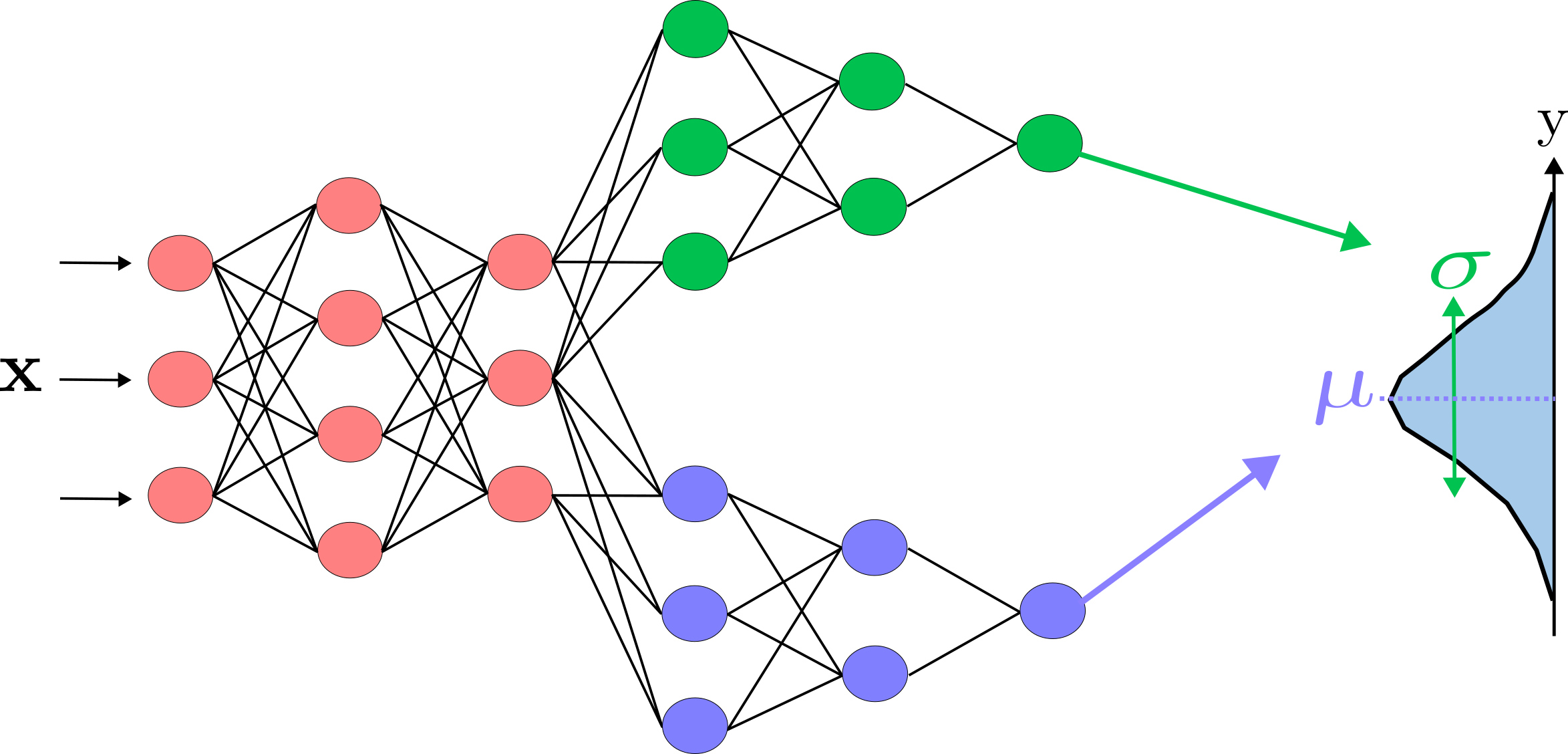

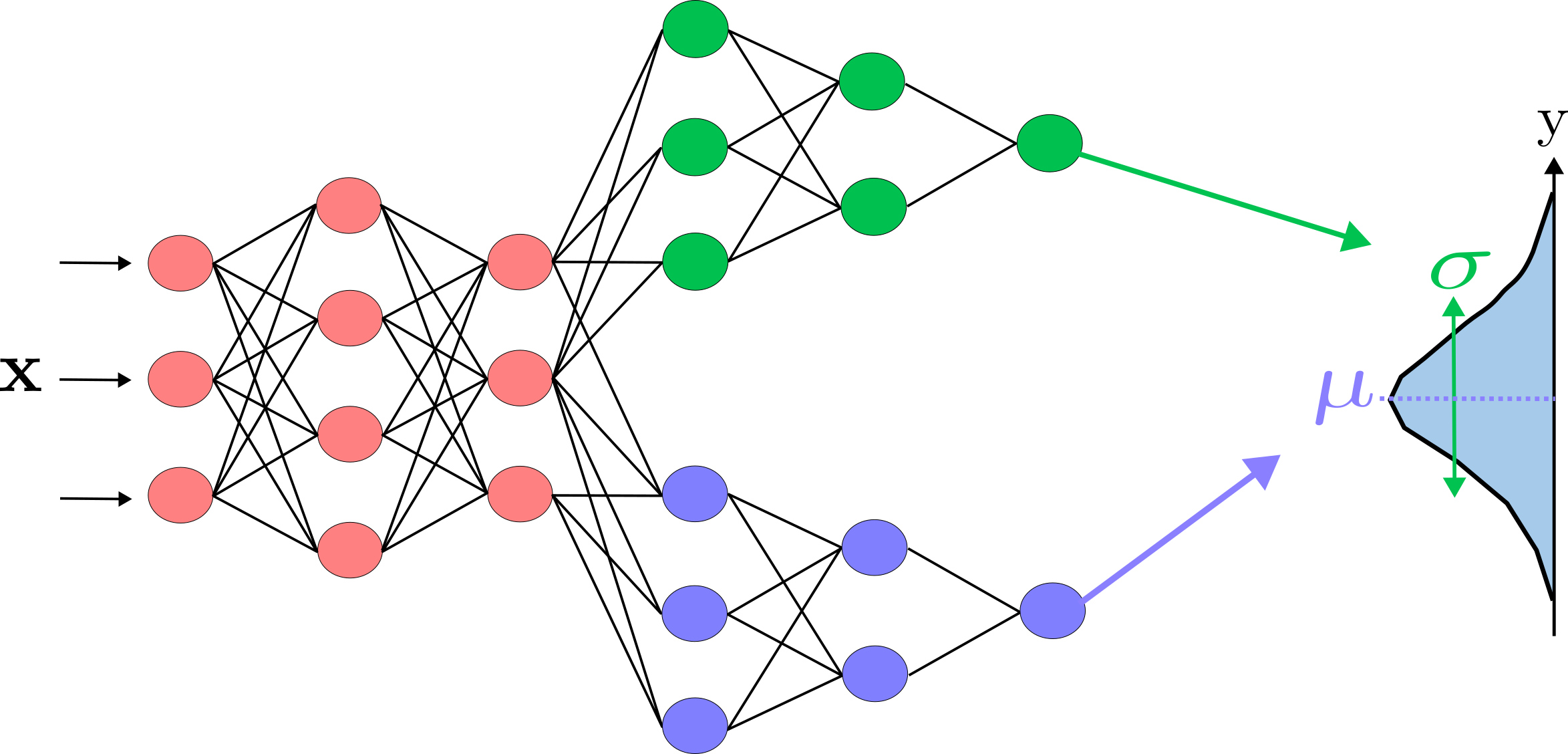

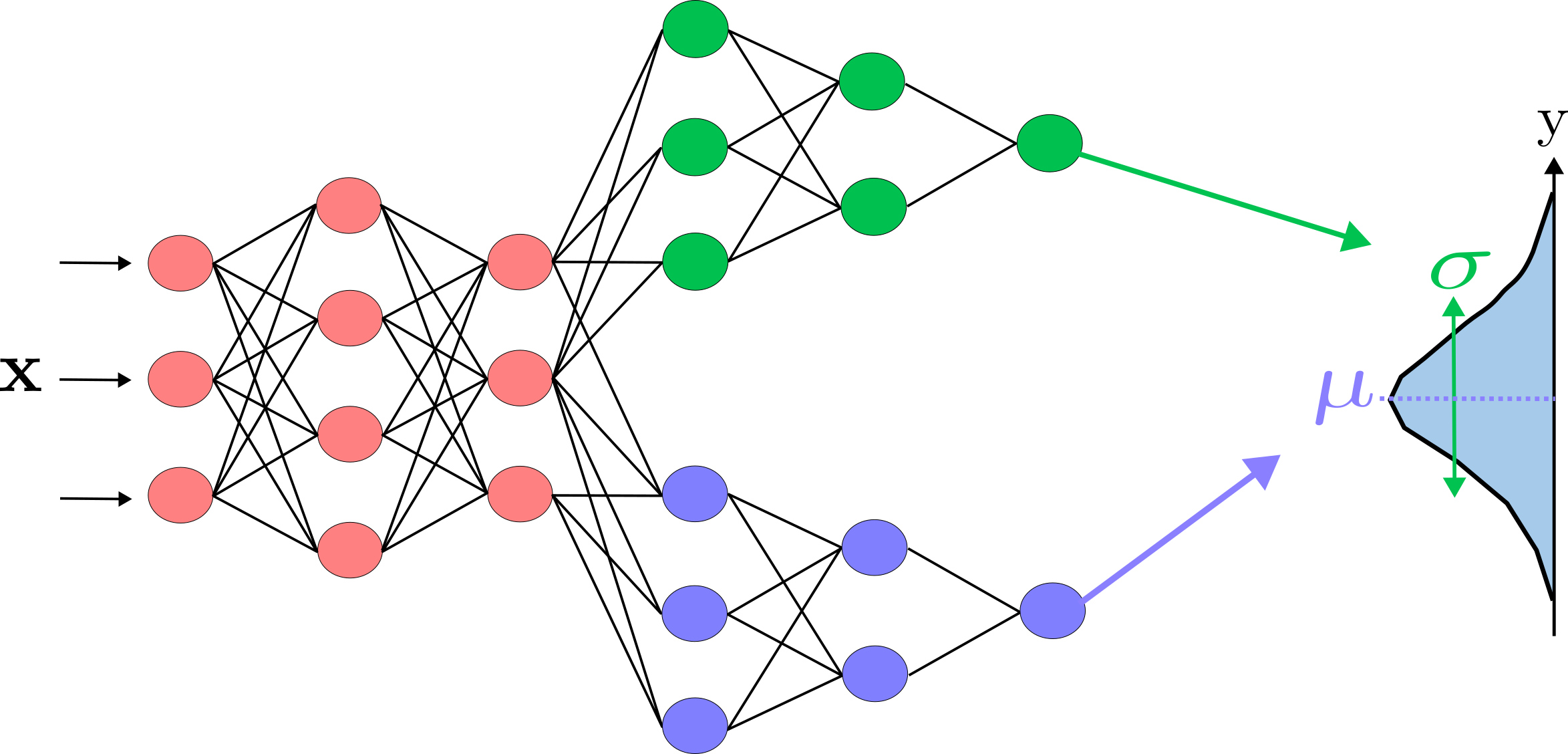

29 Jun 2024 - Multilayer Perceptron, Bayesian Neural Network, and Regression

This project analyzes taxi trip durations in NYC for the year 2023, using a dataset of almost 35 million records. We perform brief preprocessing to remove outliers and errors, using DuckDB and SQL to extract 2 million representative records. We then use this data to train three models: a simple neural network without uncertainty estimation, a Bayesian neural network (BNN), and a dual-headed Bayesian neural network (Dual BNN). Comparing these models shows that the Dual BNN can vary its uncertainty estimates with the input data, whereas the simple BNN estimates a constant level of aleatoric (data) uncertainty and the simple neural network provides only point estimates. This input-dependent uncertainty makes the Dual BNN particularly well suited to predicting taxi trip durations: its predictions are more informative and reliable, which is crucial for effective decision-making in real-world applications.
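The difference between a constant and an input-dependent uncertainty can be illustrated with a minimal sketch. It assumes (this is not taken from the post) that the Dual BNN outputs a mean `mu` and a standard deviation `sigma` for each trip, is trained with a Gaussian negative log-likelihood, and that predictions are formed from several Monte Carlo forward passes with sampled weights. The function names and sample values below are hypothetical.

```python
import math
from statistics import fmean, pvariance


def gaussian_nll(y, mu, sigma):
    """Negative log-likelihood of y under N(mu, sigma^2).

    A dual-headed network predicts both mu and sigma for each input, so
    minimizing this loss lets sigma grow where the data are noisy.
    """
    return 0.5 * math.log(2 * math.pi * sigma ** 2) + (y - mu) ** 2 / (2 * sigma ** 2)


def predictive_moments(samples):
    """Combine Monte Carlo samples [(mu_s, sigma_s), ...] into predictive moments.

    Each (mu_s, sigma_s) pair comes from one forward pass with weights sampled
    from the (approximate) posterior. By the law of total variance:
        total = E_s[sigma_s^2] (aleatoric) + Var_s[mu_s] (epistemic)
    """
    mus = [mu for mu, _ in samples]
    aleatoric = fmean([sigma ** 2 for _, sigma in samples])
    epistemic = pvariance(mus)
    return {
        "mean": fmean(mus),
        "aleatoric": aleatoric,
        "epistemic": epistemic,
        "total": aleatoric + epistemic,
    }


# Hypothetical forward-pass samples (in minutes) for one trip. A simple BNN
# would use the same sigma for every trip; a dual head predicts sigma per input.
samples = [(14.0, 3.0), (16.0, 3.0), (15.0, 3.0)]
print(predictive_moments(samples))
```

In this decomposition, the epistemic term reflects disagreement between sampled networks, while the aleatoric term is what the second head makes input-dependent.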

The Theory Behind Tree Based Algorithms

30 Apr 2024 - Decision Tree and Random Forest

A decision tree is a recursive model that uses partition-based methods to predict a target (a class or a numeric value) for each instance. The process starts by splitting the dataset into two partitions, choosing the split that maximizes a metric such as information gain (for classification) or variance reduction (for regression). These partitions are then divided recursively until most instances within each partition belong to the same class (for classification) or have similar values (for regression). Decision trees can also be extended into ensemble models such as random forests, which combine many trees to improve predictive accuracy and reduce overfitting.
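A single split step can be sketched in a few lines. This is an illustrative sketch only: it evaluates candidate thresholds on one feature, using entropy-based information gain for class labels and variance reduction for numeric targets. A full tree would repeat this over all features and recurse into each partition. The function names and toy data are hypothetical.

```python
import math
from collections import Counter
from statistics import pvariance


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, left, right):
    """Entropy of the parent minus the size-weighted entropy of the children."""
    n = len(parent)
    children = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(parent) - children


def variance_reduction(parent, left, right):
    """Variance of the parent minus the size-weighted variance of the children."""
    n = len(parent)
    children = len(left) / n * pvariance(left) + len(right) / n * pvariance(right)
    return pvariance(parent) - children


def best_split(xs, ys, criterion):
    """Try midpoints between consecutive distinct values of one feature and
    return (threshold, score) for the split that maximizes `criterion`."""
    best_threshold, best_score = None, -math.inf
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        score = criterion(ys, left, right)
        if score > best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score


# Toy data (hypothetical): one feature, with class labels or numeric targets.
xs = [1, 2, 3, 4]
print(best_split(xs, ["a", "a", "b", "b"], information_gain))  # classification
print(best_split(xs, [1.0, 1.0, 5.0, 5.0], variance_reduction))  # regression
```

Because candidate thresholds are midpoints between distinct values, both children are always non-empty.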

Analysis of Taxi Trip Duration in NYC

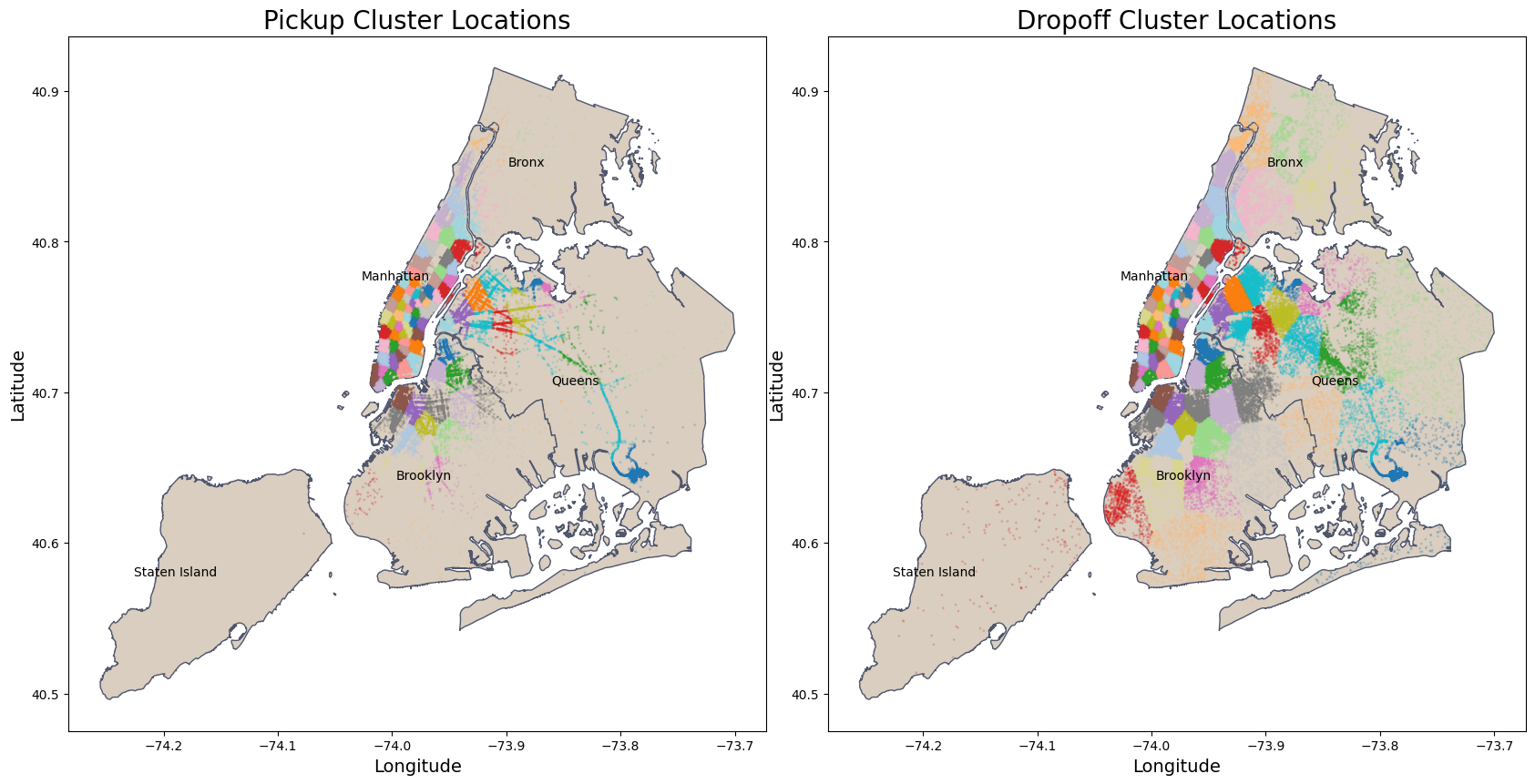

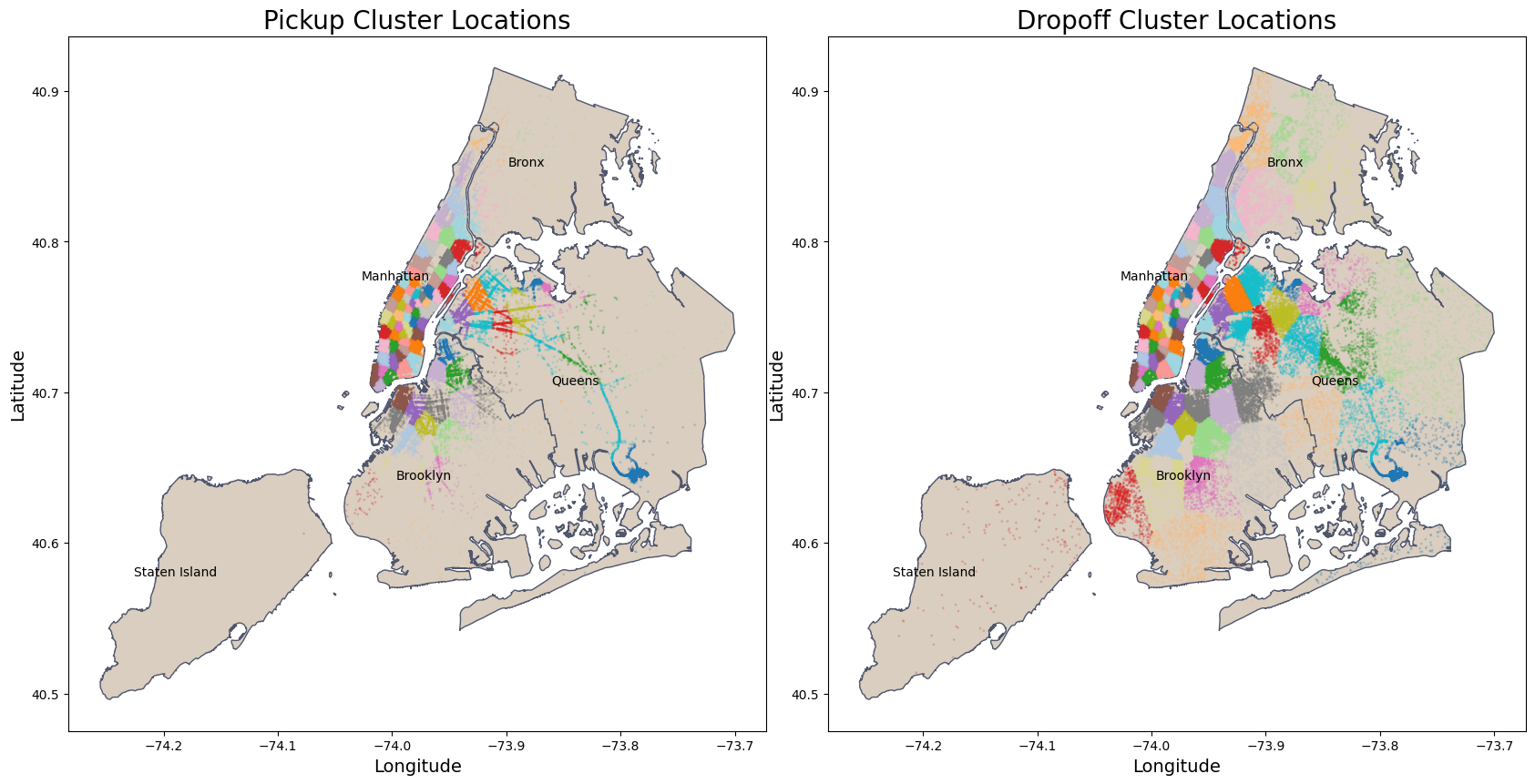

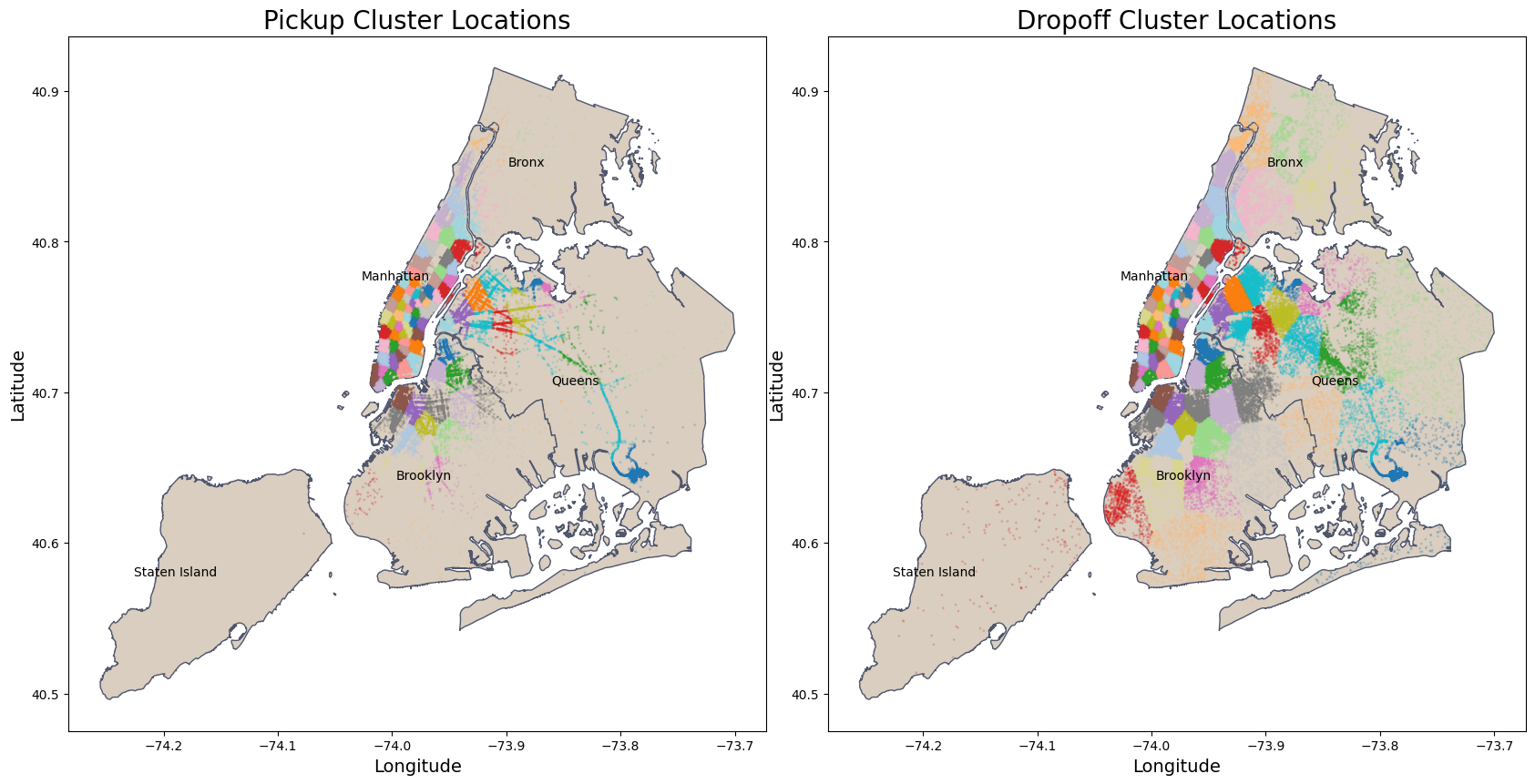

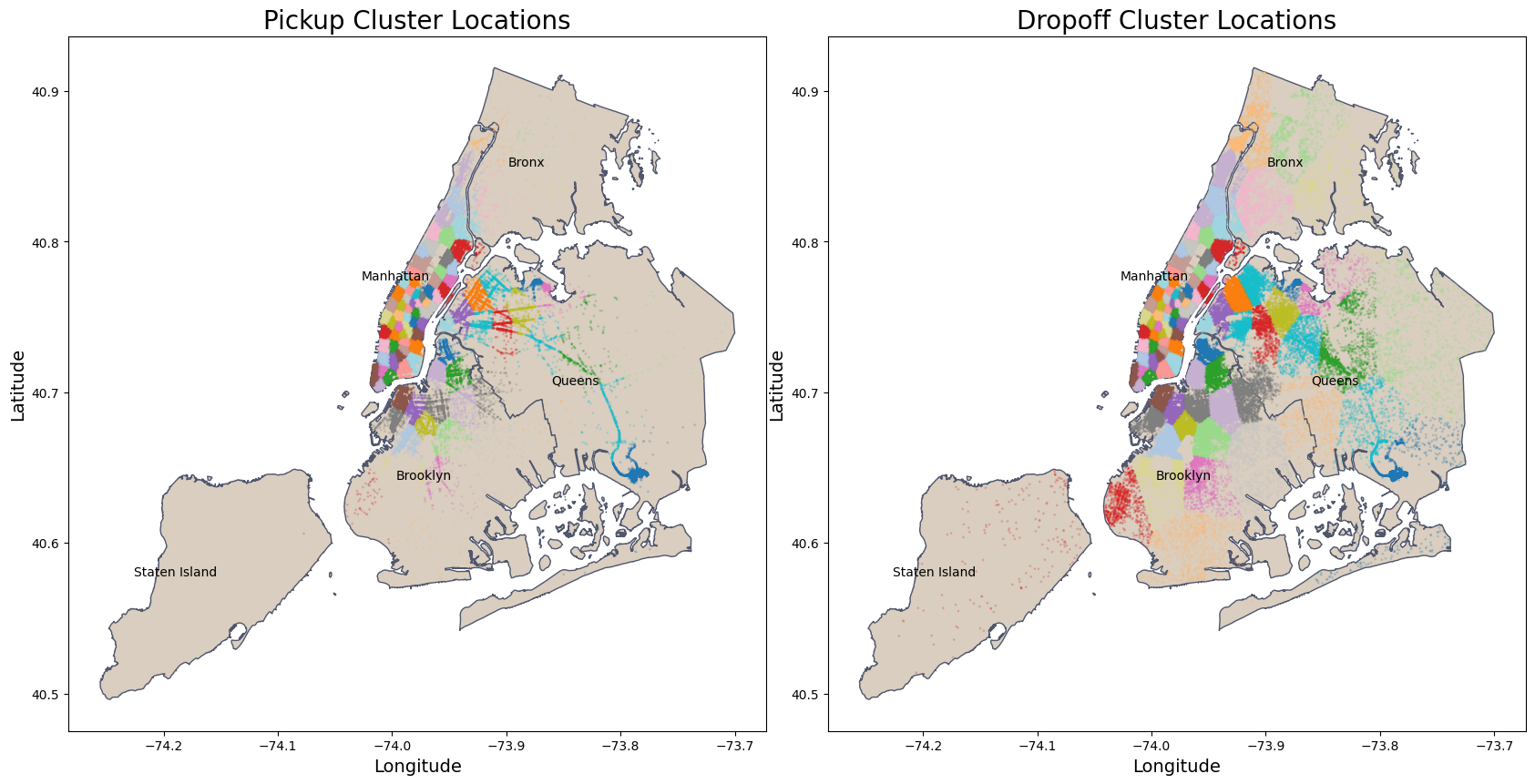

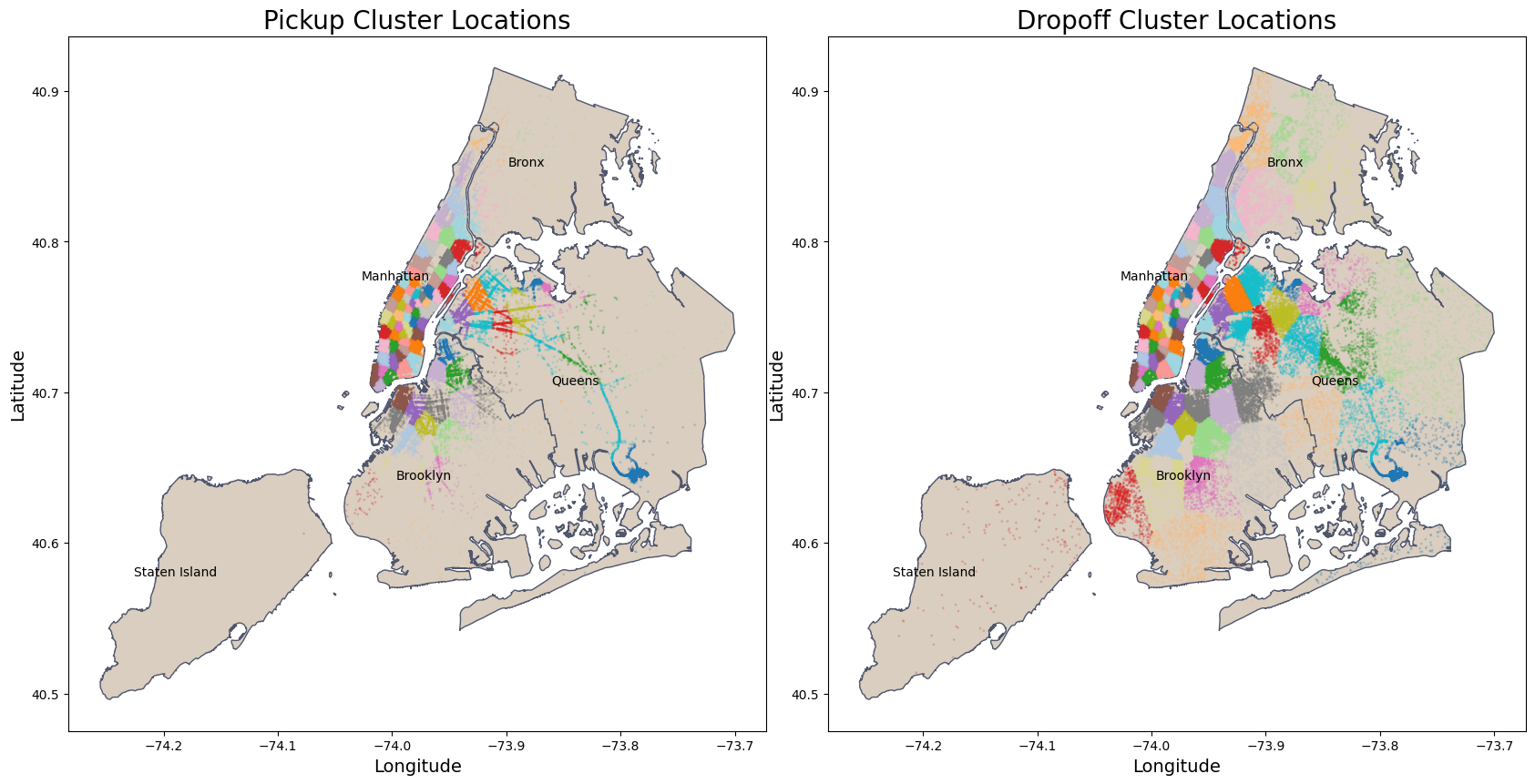

22 Jan 2024 - Regression, XGBoost, Decision Tree, and K-means clustering

In this project, we build a machine learning model to predict the duration of taxi trips in New York City. The dataset is from Kaggle: the original data has approximately 1.4 million entries in the training set and 630k in the test set, although only the training set is used in this project. The data is preprocessed, outliers are removed, and new features are created, after which it is split into training, validation, and test sets. The evaluation metric is the Root Mean Squared Logarithmic Error (RMSLE), which is the root mean squared error computed on the logarithms of the predicted and actual values (more precisely, on log(1 + y)). Because it compares logarithms, RMSLE measures relative rather than absolute error: the same absolute error is penalized less on a long trip than on a short one. To this end, we employ three types of machine learning models: mini-batch k-means to create new cluster features for the locations, and a Decision Tree Regressor and XGBoost to predict the trip duration.
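The metric itself is short enough to write out. The sketch below is a plain-Python version of RMSLE, not the code used in the project; the trip durations in the example are hypothetical and only illustrate that a 100-second error costs much more on a short trip than on a long one.

```python
import math


def rmsle(y_true, y_pred):
    """Root Mean Squared Logarithmic Error.

    Uses log1p(y) = log(1 + y) so that zero durations are allowed;
    all values must be greater than -1 (in practice, non-negative).
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    squared = [(math.log1p(p) - math.log1p(a)) ** 2 for a, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


# The same 100-second error on a short and on a long trip (hypothetical values):
short_trip = rmsle([200], [300])
long_trip = rmsle([2000], [2100])
print(f"short: {short_trip:.4f}, long: {long_trip:.4f}")
```

Since RMSLE is just RMSE on log1p-transformed values, a common approach is to train a regressor on log1p(duration) with a squared-error loss and map predictions back with math.expm1. The metric is also asymmetric: underestimating by a given amount is penalized more than overestimating by the same amount.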

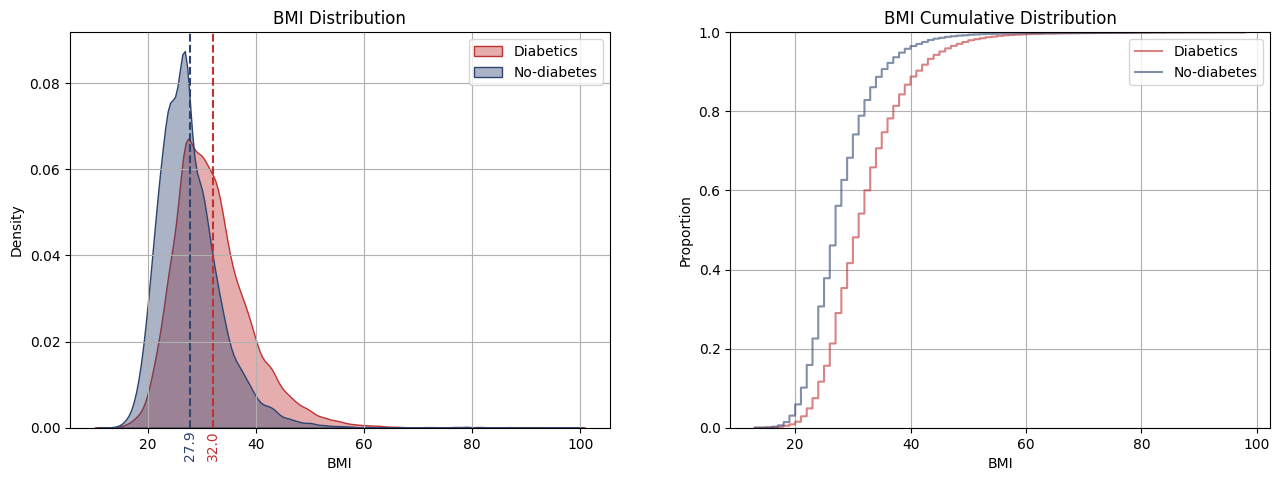

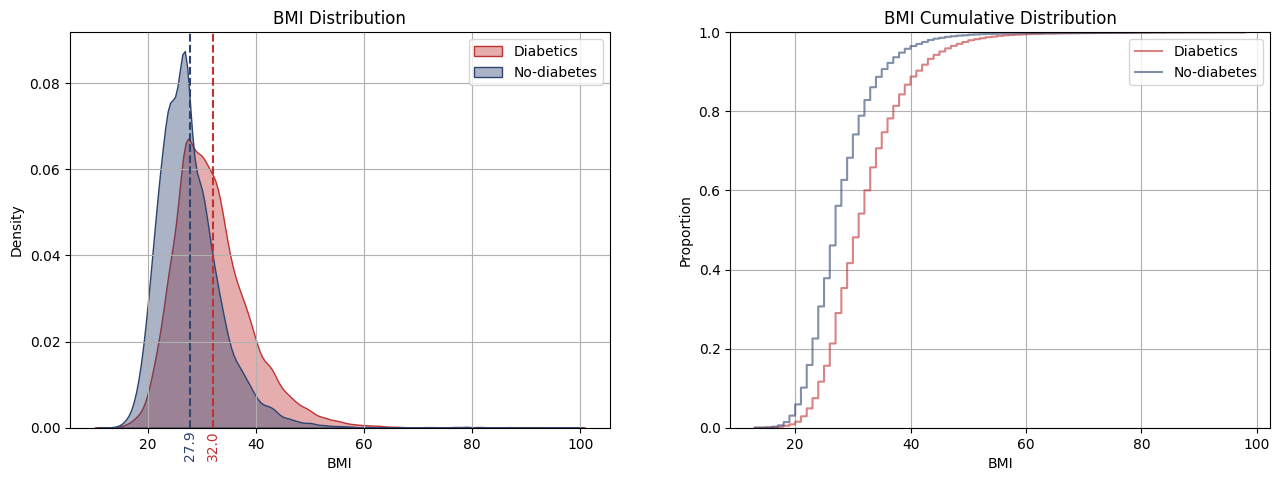

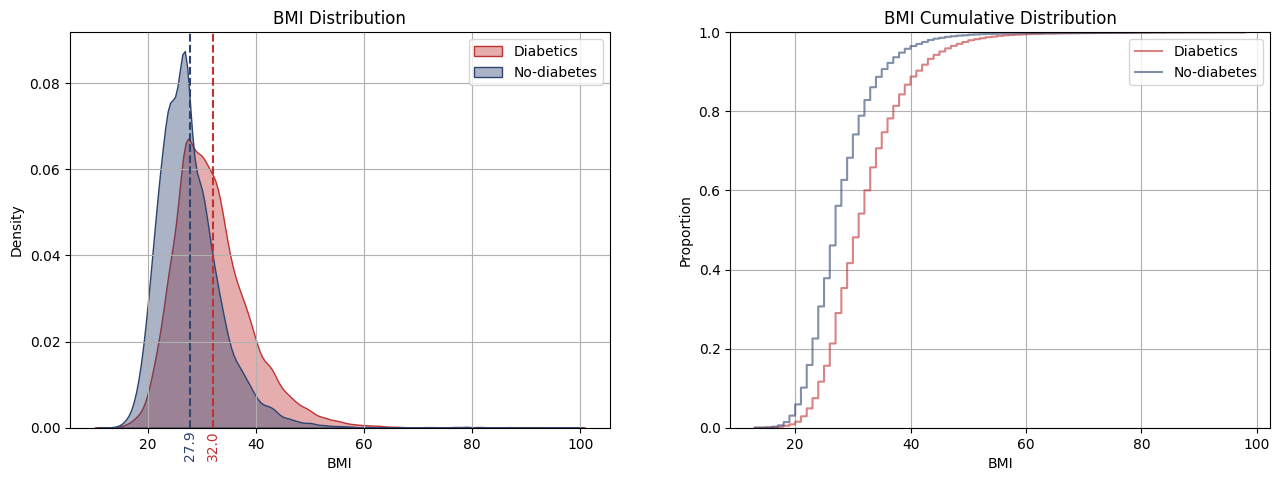

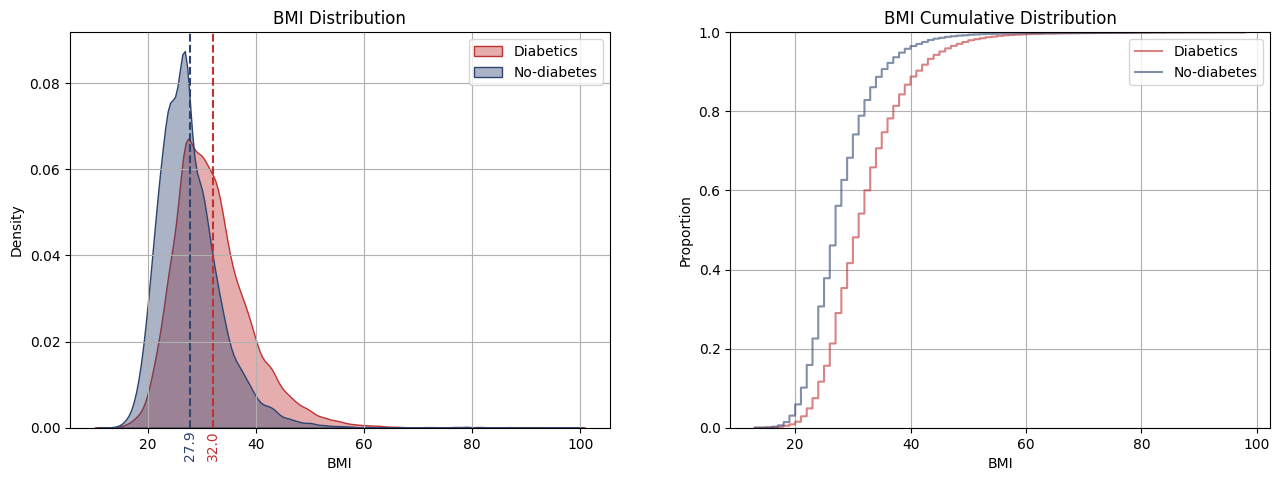

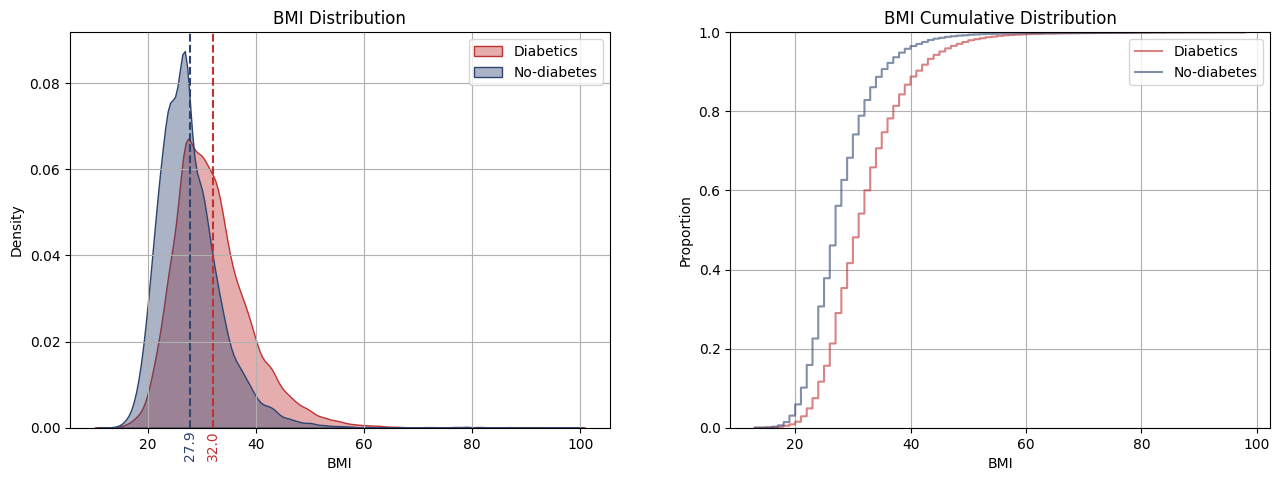

Analysis of Diabetes Indicators

13 Dec 2023 - Classification, Random Forest, Decision Tree, and Logistic Regression

Diabetes is among the most prevalent chronic diseases in the United States, affecting millions of Americans each year. It is a condition in which individuals lose the ability to effectively regulate blood glucose levels, and it can lead to reduced quality of life and life expectancy. This project uses the Diabetes Health Indicators dataset, available on Kaggle, and seeks to answer the following questions: Which risk factors most strongly predict diabetes? Can a subset of the risk factors accurately predict whether an individual has diabetes?

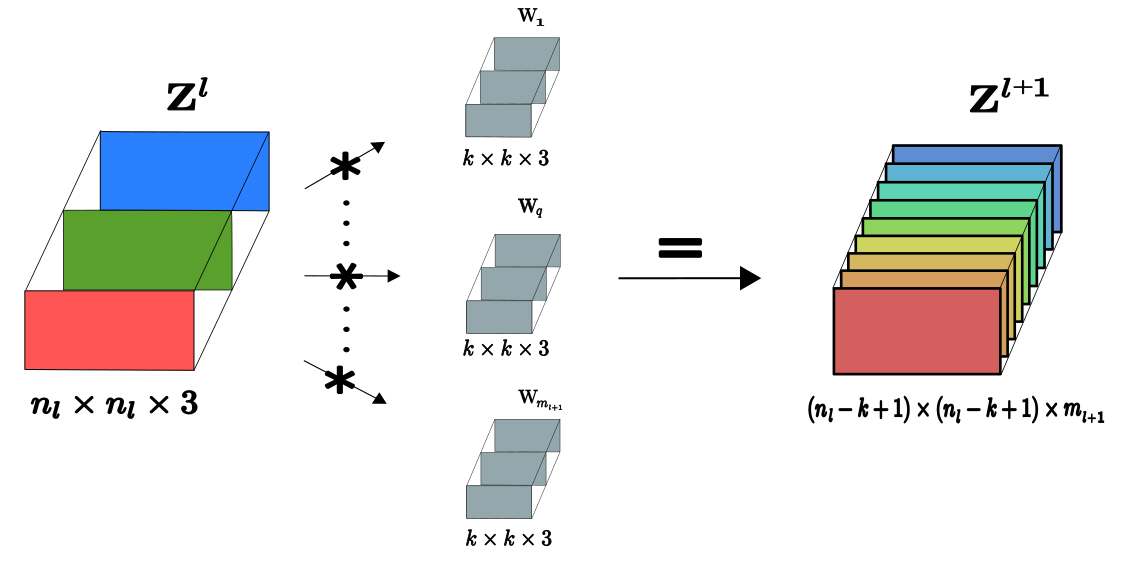

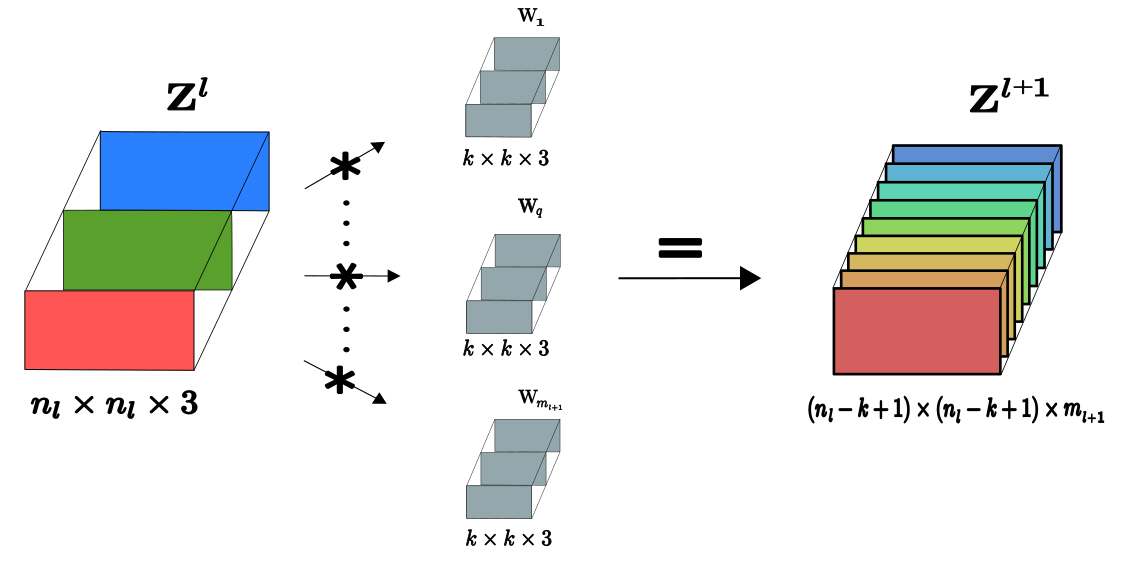

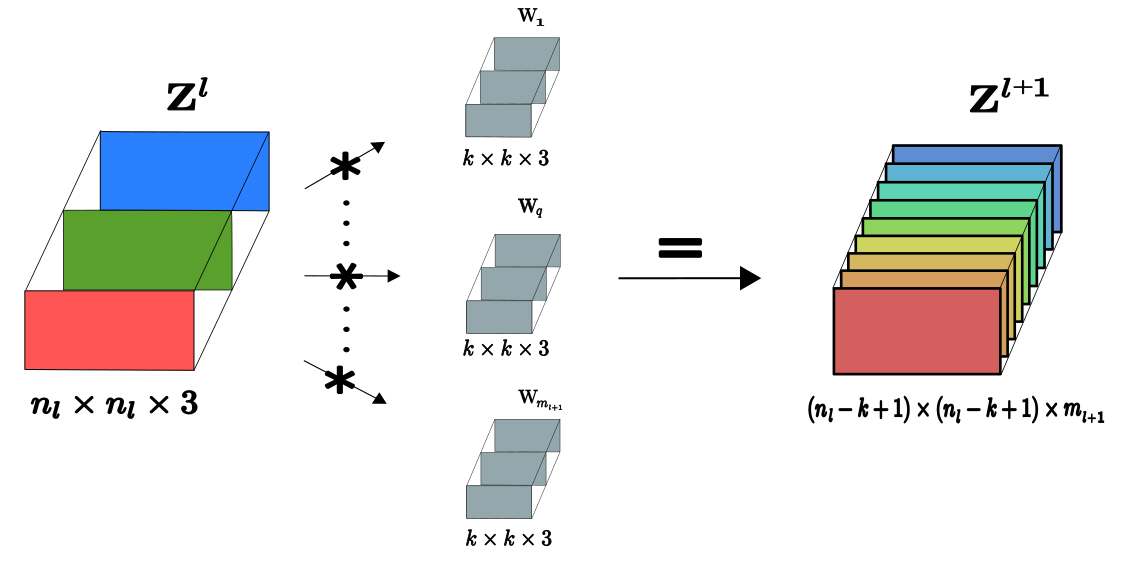

Understanding Convolutional Layers in a Convolutional Neural Network

27 Nov 2023 - Convolutional Neural Network and Classification

A Convolutional Neural Network (CNN) is essentially a feedforward Multi-Layer Perceptron (MLP) designed to recognize local patterns in input data through sparse connectivity (each neuron is connected only to a small, local region of the previous layer rather than to all of its neurons). As in an MLP, neurons are connected through learnable weights, which are adjusted during training to optimize the network's performance on a specific task. The main difference between MLPs and CNNs is that the latter are designed to process multidimensional data, such as images or videos. CNNs also use a more diverse set of specialized layers, including convolutional, pooling, and upsampling layers, which are suited to processing spatial (image) and temporal (video) data. In this article, we focus on the convolutional and max pooling layers, which form the core of CNNs.
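The two layers discussed in the post can be sketched for a single-channel input in plain Python. This is a minimal illustration, not an efficient or framework-accurate implementation: like most deep learning libraries, `conv2d` computes a cross-correlation (the kernel is not flipped), uses no padding, and applies the same kernel weights at every position, so each output value depends only on a small local window of the input. The function names and example values are hypothetical.

```python
def conv2d(image, kernel, stride=1):
    """'Valid' 2D convolution (cross-correlation) of one channel, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    return [
        [
            # The same kernel weights are shared across all output positions.
            sum(image[i * stride + di][j * stride + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(fmap, size=2, stride=2):
    """Keep the maximum value of each size x size window of a feature map."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [
        [
            max(fmap[i * stride + di][j * stride + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A vertical edge (dark left half, bright right half) and an edge-detecting kernel.
image = [[0, 0, 1, 1] for _ in range(4)]
edge_kernel = [[-1, 1], [-1, 1]]
features = conv2d(image, edge_kernel)
print(features)
print(max_pool2d(features))
```

The convolution responds only where the edge is, and pooling keeps that strongest response while shrinking the feature map.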

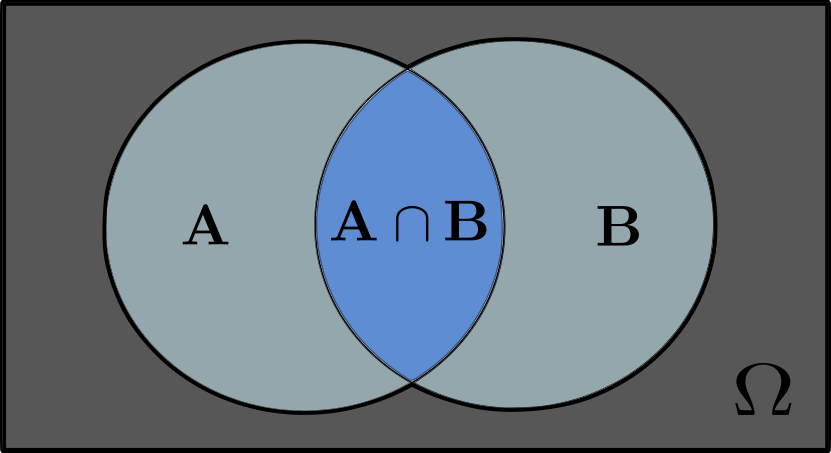

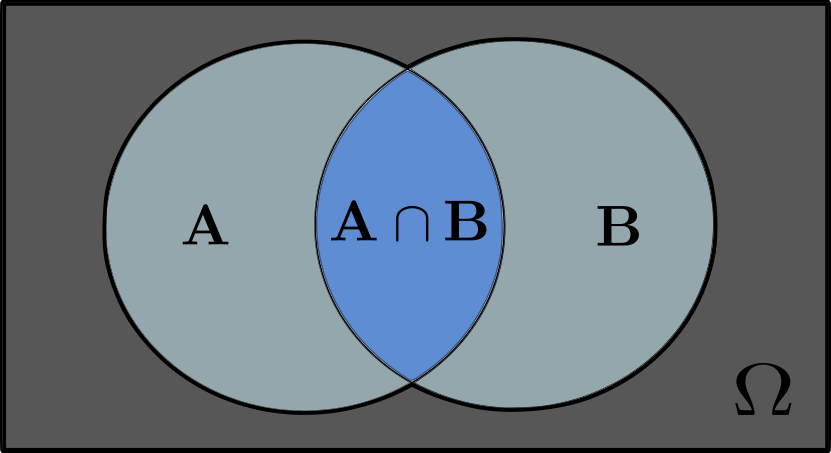

Probabilities and Bayes Rule

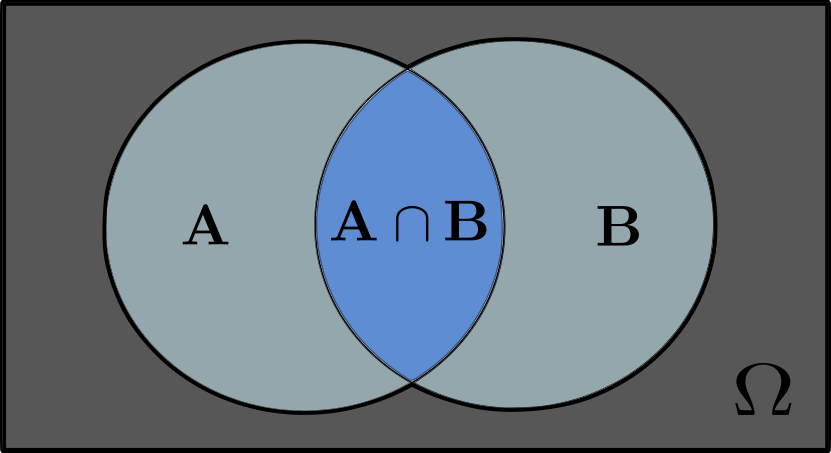

22 Nov 2023 - Probability and Bayes

Here, I explain the basics of probability and Bayes' rule, aiming to build an intuitive understanding of probabilities through set theory. Each section includes an example to illustrate how the concepts apply in practice.
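The set-theoretic view maps directly onto Python sets. The sketch below treats events as subsets of the sample space of a fair six-sided die (a hypothetical example, not necessarily the one used in the post), computes conditional probability as P(A | B) = P(A ∩ B) / P(B), and checks that Bayes' rule, P(A | B) = P(B | A) P(A) / P(B), gives the same answer. `Fraction` keeps the results exact.

```python
from fractions import Fraction

OMEGA = frozenset(range(1, 7))  # sample space of a fair six-sided die


def prob(event, omega=OMEGA):
    """Classical probability for equally likely outcomes: |event| / |omega|."""
    return Fraction(len(event & omega), len(omega))


def conditional(a, b, omega=OMEGA):
    """P(A | B) = P(A ∩ B) / P(B); undefined (ZeroDivisionError) if P(B) = 0."""
    return prob(a & b, omega) / prob(b, omega)


def bayes(a, b, omega=OMEGA):
    """P(A | B) computed with Bayes' rule: P(B | A) * P(A) / P(B)."""
    return conditional(b, a, omega) * prob(a, omega) / prob(b, omega)


even = frozenset({2, 4, 6})
greater_than_3 = frozenset({4, 5, 6})
print(conditional(even, greater_than_3))  # P(even | roll > 3)
print(bayes(even, greater_than_3))        # same value via Bayes' rule
```

Here the intersection {4, 6} has two of the three outcomes in {4, 5, 6}, so both calls return 2/3.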

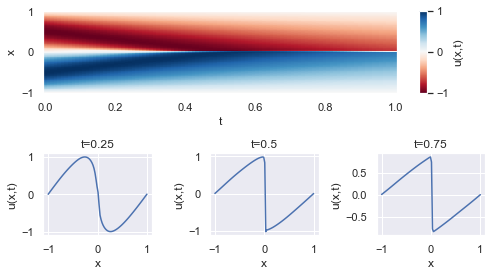

Solving the 1D Burgers' Equation with Physics-Informed Neural Networks (PINNs)

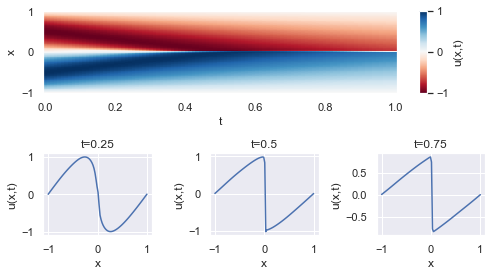

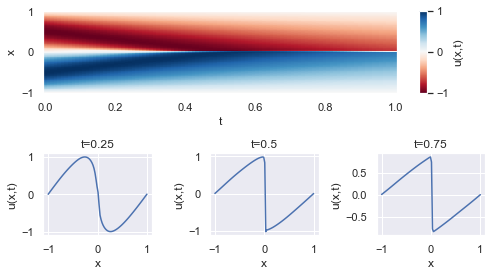

15 Jul 2023 - Multilayer Perceptron and Physics-Informed Neural Network

This project aims to solve the one-dimensional Burgers' equation using a Physics-Informed Neural Network (PINN). Burgers' equation is a fundamental partial differential equation (PDE) in applied mathematics, used to model phenomena in fluid mechanics, nonlinear acoustics, and gas dynamics.
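The core idea of a PINN is to penalize the PDE residual u_t + u u_x - ν u_xx of the viscous Burgers' equation at sampled collocation points, together with the errors on the initial and boundary conditions. The sketch below shows that loss structure only. It makes two simplifying assumptions not taken from the post: `u` is any Python callable standing in for the network, and derivatives are approximated with central finite differences instead of the automatic differentiation a real PINN would use. The viscosity value and the test points are arbitrary illustrative choices.

```python
import math


def burgers_residual(u, x, t, nu, h=1e-4):
    """Residual f = u_t + u*u_x - nu*u_xx of the 1D viscous Burgers' equation.

    `u` is any callable u(x, t); in a PINN it would be the neural network and
    the derivatives would come from automatic differentiation. Here they are
    approximated with central finite differences of step h.
    """
    u0 = u(x, t)
    u_t = (u(x, t + h) - u(x, t - h)) / (2 * h)
    u_x = (u(x + h, t) - u(x - h, t)) / (2 * h)
    u_xx = (u(x + h, t) - 2 * u0 + u(x - h, t)) / h ** 2
    return u_t + u0 * u_x - nu * u_xx


def pinn_loss(u, collocation, initial, boundary, nu):
    """PINN-style loss: mean squared PDE residual at collocation points (x, t),
    plus mean squared errors at initial and boundary points (x, t, target)."""
    mse_pde = sum(burgers_residual(u, x, t, nu) ** 2 for x, t in collocation) / len(collocation)
    mse_ic = sum((u(x, t) - target) ** 2 for x, t, target in initial) / len(initial)
    mse_bc = sum((u(x, t) - target) ** 2 for x, t, target in boundary) / len(boundary)
    return mse_pde + mse_ic + mse_bc


# u(x, t) = x / (1 + t) solves the viscous equation exactly for any nu,
# so its loss should be close to zero (up to finite-difference error).
def exact(x, t):
    return x / (1 + t)


nu = 0.01 / math.pi
collocation = [(0.5, 0.5), (-0.3, 0.2), (0.8, 0.9)]
initial = [(x, 0.0, x) for x in (-1.0, 0.0, 1.0)]
boundary = [(1.0, t, 1.0 / (1.0 + t)) for t in (0.25, 0.5, 1.0)]
print(pinn_loss(exact, collocation, initial, boundary, nu))
```

During training, the network's weights would be updated to drive this combined loss toward zero, so the physics acts as a regularizer rather than requiring labeled solution data everywhere.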
